How Product Architecture Can Revolutionize The "Boring" Field of Data Pipelines

Product is not only UI/UX and customer workflows. Innovation can also come from the technical implementation. Beware, technical post.

Product design goes beyond UI/UX. This article dives into product architecture, the underlying structure that shapes how users interact with the product. Think of it as the difference between a product that is drag-and-drop and a product that requires coding. There’s a place for both.

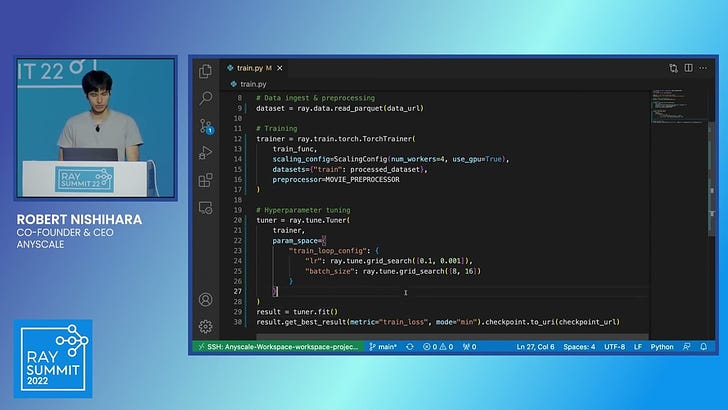

This article is about Ray, a tool for building data pipelines that “magically” optimizes the data pipeline code to be more efficient. See below for a simplified example.

Shaul here. This is the first article on my blog, aimed at current and future product managers looking to broaden their horizons and improve their craft. I plan to keep the articles short (<10 minutes to read) and practical. I'm excited to start, and I hope you'll enjoy the read :)

Let's start with the final conclusions, or TLDR:

Imagine a future where compilers can automatically optimize your code.

Consider this code:

input1 = ...

result1 = func1(input1)

result2 = func2(input1)

func3(result1, result2)

This code hides an opportunity. The compiler could detect that func1 and func2 are independent (no data dependencies) and automatically parallelize them.

This isn't science fiction. It means:

Faster execution: Running tasks simultaneously cuts down overall runtime.

Cloud power: Spawn server instances on the fly to handle the parallel workload.
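To make "detecting independence" concrete, here is a minimal sketch that uses Python's standard-library graphlib, not Ray itself. The dependency map is hand-written for the snippet above and is an assumption for illustration; a real system would derive it from the code or from explicit annotations. Tasks that land in the same stage have no data dependencies on each other, so they could run in parallel.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map for the snippet above:
# each key lists what must be available before it can run.
dependencies = {
    "func1": {"input1"},
    "func2": {"input1"},
    "func3": {"func1", "func2"},
}

def parallel_stages(deps):
    """Group tasks into stages; tasks within one stage are independent."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # everything runnable right now
        stages.append(ready)
        sorter.done(*ready)  # mark the stage finished to unlock the next one
    return stages

stages = parallel_stages(dependencies)
print(stages)  # [['input1'], ['func1', 'func2'], ['func3']]
```

The second stage contains both func1 and func2, which is exactly the parallelization opportunity described above.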

But wait, there's more!

What if result1 and result2 are massive (10GB each)? Traditionally, func3 would wait for both results before starting. But with a smarter compiler, it could process data as it becomes available from func1 and func2, further squeezing out every drop of performance.

The impact? Code that runs significantly faster, utilizes cloud resources efficiently, and unlocks a new level of performance optimization.

That is a major change in how data pipelines operate, and it can bring large gains in speed and cost.

That’s the essence of what Ray provides, and we will go over it in this article, and discuss its benefits.

But don't worry if this sounds too technical. We'll start from the basics, exploring existing tools in the market, understanding how they operate, and then seeing how Ray stands out.

We'll go over:

What are ETL tools (the general category of this kind of software)

What are ML pipeline tools

Typical Machine Learning Pipeline Flow

Problems in current ML pipeline flow

Ray and how it solves these problems by being designed differently

Conceptual Code Example

Competing Technologies

Origins of Ray

Links to YouTube videos for further learning

What are ETL Tools?

ETL (Extract, Transform, Load) tools are essential for data integration, enabling the extraction of data from various sources, transforming it into a suitable format, and loading it into a target database or data warehouse. These tools automate complex tasks, ensuring data consistency, accuracy, and accessibility. ETL tools are vital in business intelligence and data management strategies and can be broadly categorized into:

Simple drag-and-drop no-code tools: Examples include SSIS (SQL Server Integration Services) and Azure Data Factory.

Programmatic tools: Examples include Apache Airflow, where pipelines are defined in code.

What are ML Pipeline Tools?

Imagine building a recipe for success with machine learning. In the past few years, new tools have appeared to help you with each step, not just the machine learning part. These tools, called ML pipeline tools for short, are like a kitchen for data science.

ML pipeline tools help manage the entire process of creating a powerful machine learning model, from gathering ingredients (data) to cooking it up (training) and making sure it tastes great (monitoring). This frees up data scientists, the cooks in this analogy, to focus on perfecting the recipe (fine-tuning) and discovering new dishes (finding insights). By automating repetitive tasks, these tools make building and using machine learning models faster, more reliable, and easier for everyone. Examples include Kubeflow, Red Hat OpenShift Data Science (based on Kubeflow), and Amazon SageMaker.

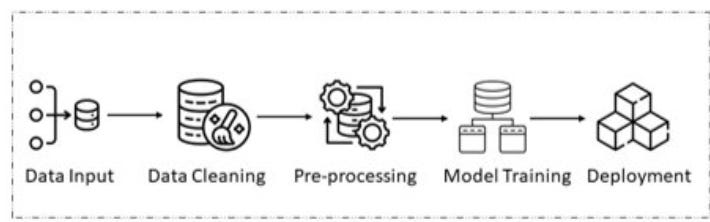

Typical Machine Learning Pipeline Flow

Here’s a simple flow of data in an ML (machine learning) pipeline.

Image Credit: https://www.design-reuse.com/articles/53595/an-overview-of-machine-learning-pipeline-and-its-importance.html

A typical ML pipeline starts with data input, followed by steps for cleaning, preprocessing, training, and ultimately deploying the model. Some of these steps may run in the ETL layer and others in the ML pipeline. However, this conventional approach has notable drawbacks.

Problems with Common ML Pipelines

As explained by Prof. Ion Stoica (see video link at end of this post), one of Ray’s founders, traditional ML pipelines face several challenges:

Scaling Complexity: Each step (e.g., preprocessing, training, tuning, serving) must be scaled separately on different machines, which is both labor-intensive and inefficient. It means thinking through scaling mechanisms, monitoring, failsafe mechanisms, and so on for every step. There are sometimes 5-10 tools available for each step. That’s a lot to learn, a lot to deploy, and a lot to scale: too much work for ML engineers.

Data Transfer Bottlenecks: Moving enormous amounts of data between stages means writing to and reading from persistent storage, which is slow and cumbersome. What if we didn’t need to write and read 10GB to persistent storage every time we move between steps? It’d be much faster.

Built for batch processing, not real time: Given the scaling issues (pipelines are often run slower to save costs) and the data transfer issues (which make things slower and more expensive), it is difficult to imagine such an ML pipeline running in real time. Yet sometimes the business needs everything to run in real time, in response to a user request.

What is Ray and how it solves these problems using a different architecture

Ray rethinks the ML pipeline by consolidating all stages into a unified Python-based system. According to Robert Nishihara, CEO of Anyscale (Ray’s commercialization company), this integration removes the persistent-storage handoffs that traditionally fragment the stages. Instead, Ray uses something akin to a Linux pipe to pass data directly from one stage to the next, which enables real-time processing and reduces latency. This approach reduces reliance on complex technologies like Apache Spark (Ion Stoica, one of Ray's founders, also co-founded Databricks, the company built around Spark) and makes the pipeline more flexible and easier to scale. By dynamically allocating backend tasks, Ray also makes better use of resources across diverse workloads.

Novelty of Ray - a summary:

Unified Python Code: Ray consolidates all ML pipeline stages into Python code, making distributed computing as simple as writing single-node Python code.

Dynamic Resource Allocation: Ray can dynamically allocate resources, such as launching and shutting down multiple EC2 instances as needed, based on the demands of the pipeline.

Concurrent Execution: By creating a "pipe" between adjacent steps, Ray allows for concurrent execution, significantly speeding up the process.

Conceptual Code Example

Let’s consider this code again:

input1 = ...

result1 = func1(input1)

result2 = func2(input1)

func3(result1, result2)

See the hidden opportunity? The compiler could detect that func1 and func2 are independent (no data dependencies) and automatically parallelize them.

This isn't science fiction. It means:

Faster execution: Running tasks simultaneously cuts down overall runtime.

Cloud power: Spawn server instances on the fly to handle the parallel workload.
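One caveat worth stating: in Ray this parallelism is expressed explicitly rather than discovered by a compiler. You mark a function with the `@ray.remote` decorator, call it as `func1.remote(input1)` to get a future back immediately, and collect results with `ray.get`. The sketch below shows the same shape using only Python's standard library, so it runs without a cluster. The function bodies are invented placeholders, and the thread pool is a stand-in for Ray's scheduler, not a description of how Ray works internally.

```python
from concurrent.futures import ThreadPoolExecutor

# Placeholder stages: the names mirror the snippet above,
# the bodies are made up for illustration.
def func1(data):
    return [x * 2 for x in data]

def func2(data):
    return [x + 1 for x in data]

def func3(result1, result2):
    return sum(result1) + sum(result2)

def run_pipeline(input1):
    with ThreadPoolExecutor(max_workers=2) as pool:
        # Both calls are submitted before either result is awaited,
        # so the two independent steps can overlap.
        future1 = pool.submit(func1, input1)
        future2 = pool.submit(func2, input1)
        # func3 needs both results, so we block here until they are ready.
        return func3(future1.result(), future2.result())

total = run_pipeline([1, 2, 3])
print(total)  # 21
```

With CPU-heavy pure-Python functions, threads will not give a real speedup because of the interpreter lock. The point here is the structure: submit independent work first, wait only where the data is needed.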

But wait, there's more!

What if result1 and result2 are massive (10GB each)? Traditionally, func3 would wait for both results before starting. But with a smarter compiler, it could process data as it becomes available from func1 and func2, further squeezing out every drop of performance.
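The "process data as it becomes available" idea can be sketched with plain Python generators. This is an analogy under stated assumptions, not Ray's actual data-passing mechanism: `read_chunks` and the function bodies are hypothetical, and everything runs in a single process. What it does show is that func3 starts consuming output before func1 and func2 have finished, and that only one chunk per stream is held in memory at a time.

```python
from itertools import islice

def read_chunks(values, size):
    """Hypothetical source: yield the input in fixed-size chunks."""
    iterator = iter(values)
    while chunk := list(islice(iterator, size)):
        yield chunk

def func1(chunks):
    for chunk in chunks:
        yield [x * 2 for x in chunk]  # emit each processed chunk immediately

def func2(chunks):
    for chunk in chunks:
        yield [x + 1 for x in chunk]

def func3(stream1, stream2):
    total = 0
    # zip pulls one chunk from each upstream stage at a time,
    # so aggregation begins long before the full results exist.
    for part1, part2 in zip(stream1, stream2):
        total += sum(part1) + sum(part2)
    return total

data = list(range(10))
result = func3(func1(read_chunks(data, 4)), func2(read_chunks(data, 4)))
print(result)  # 145
```

Here nothing is written to storage between the steps, which is the handoff cost the earlier sections describe.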

Competing Technologies

Several technologies exist in the realm of distributed systems, each with unique strengths:

Kubeflow: Simplifies ML workflow deployment on Kubernetes but focuses on orchestration rather than general distributed computing.

Apache Airflow: Highly flexible for authoring, scheduling, and monitoring workflows but not designed for real-time or large-scale distributed computing.

Dask: Scales Python code from a single machine to a cluster and integrates well with the PyData ecosystem, but lacks some of Ray's more advanced features.

Apache Spark: A powerful analytics engine for big data, focused primarily on batch processing and often complex to manage. It fits the early data-preparation steps of an ML pipeline better than the later, more complex steps of running models.

None of these offers Ray's flexibility for building truly distributed systems, as in the conceptual code example above. All of them can run batch workloads, but they lack the optimization points that example highlights: running independent steps in parallel and passing data between steps as it becomes available.

Origins of Ray

Ray was developed at the University of California, Berkeley's RISELab, in an effort led by Robert Nishihara and Philipp Moritz. The project emerged from the need for a flexible, easy-to-use system for distributed computing, capable of supporting a wide range of applications from large-scale data processing to reinforcement learning. The goal was to create a general-purpose distributed computing platform that was both powerful and user-friendly, addressing the limitations of existing solutions.

In conclusion, Ray offers a revolutionary approach to distributed ML processing, providing seamless integration, dynamic resource allocation, and real-time data processing capabilities. Its unique features make it a compelling alternative to traditional ML pipeline tools, driving efficiency and scalability in machine learning operations.

Links

Ray presented by Robert Nishihara, Anyscale CEO

Anyscale is the company spawned to commercialize Ray, and offers Ray as a cloud service. It is a very insightful and interesting talk.

Robert is a former PhD student in Berkeley’s RISE laboratory, where Ray was developed.

Ray presentation by Ion Stoica

Ion Stoica is a professor at UC Berkeley and one of the Ray cofounders.